Overview

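YAC provides three routines for its initialisation (only the C versions are described here), among them yac_cinit and yac_cinit_comm. In addition, there are two dummy initialisation routines: yac_cinit_dummy and yac_cinit_comm_dummy.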

Initialisation methods

MPI handshake

A detailed description of the MPI handshake algorithm can be found here.

This algorithm can be used to collectively generate the first set of communicators in a coupled run configuration, for example one communicator for each executable or for each group of executables.

The algorithm takes an array of group names as input and returns an array of communicators. Each of these communicators contains all processes that provided the same group name.
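The grouping rule described above can be illustrated without MPI. The following is a minimal sketch in plain C that only models which processes end up in the communicator for a given group name. The type `process_t` and the functions `provides_group` and `handshake_members` are hypothetical names introduced for this illustration. They are not part of YAC or MPI, and the real algorithm runs collectively over an MPI communicator.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for one MPI process: the group names it passes
 * to the handshake. Not a YAC or MPI type. */
typedef struct {
    const char *const *group_names;
    size_t n_names;
} process_t;

/* Returns 1 if the process provided `group`, 0 otherwise. */
int provides_group(const process_t *p, const char *group)
{
    for (size_t i = 0; i < p->n_names; ++i)
        if (strcmp(p->group_names[i], group) == 0)
            return 1;
    return 0;
}

/* Writes the ranks (indices into `procs`) of all processes that provided
 * `group` into `ranks_out` and returns how many there are. In this model,
 * these ranks are the members of the communicator generated for `group`.
 * `ranks_out` must have room for `n_procs` entries. */
size_t handshake_members(const process_t *procs, size_t n_procs,
                         const char *group, size_t *ranks_out)
{
    assert(procs != NULL && group != NULL && ranks_out != NULL);

    size_t count = 0;
    for (size_t rank = 0; rank < n_procs; ++rank)
        if (provides_group(&procs[rank], group))
            ranks_out[count++] = rank;
    return count;
}
```

For three processes that provide {"yac", "a"}, {"a"}, and {"yac"}, the model puts ranks 0 and 2 into the "yac" group and ranks 0 and 1 into the "a" group. A process can therefore be a member of several communicators at once.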

Default YAC initialisation

The default YAC initialisation routine first runs the MPI handshake on MPI_COMM_WORLD and provides "yac" as the group name. It then calls yac_cinit_comm with the resulting communicator.

YAC initialisation with MPI communicator

This initialisation routine takes an MPI communicator as input. All processes in this communicator have to call a YAC initialisation routine.

If any process in MPI_COMM_WORLD calls yac_cinit, the communicator passed to yac_cinit_comm has to be generated by the MPI handshake algorithm and the group name for this communicator has to be "yac". This is required in order to avoid a deadlock.
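The rule above can be written down as a small consistency check. This is only a sketch in plain C. The types `init_call_t` and `process_init_t` and the function `init_calls_are_consistent` are hypothetical and not part of YAC. The check encodes only the rule stated in this section and ignores the dummy routines.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical record of how one process initialises YAC (not a YAC type). */
typedef enum { CALLS_YAC_CINIT, CALLS_YAC_CINIT_COMM } init_call_t;

typedef struct {
    init_call_t call;
    /* Only read for CALLS_YAC_CINIT_COMM: true if the communicator was
     * generated by the MPI handshake under the group name "yac". */
    bool comm_from_yac_handshake;
} process_init_t;

/* Returns true if the calls satisfy the rule stated above: once any
 * process calls yac_cinit, every communicator passed to yac_cinit_comm
 * must come from the handshake with the group name "yac". */
bool init_calls_are_consistent(const process_init_t *procs, size_t n_procs)
{
    assert(procs != NULL || n_procs == 0);

    bool any_default_init = false;
    for (size_t i = 0; i < n_procs; ++i)
        if (procs[i].call == CALLS_YAC_CINIT)
            any_default_init = true;

    if (!any_default_init)
        return true; /* the rule only applies if yac_cinit is used */

    for (size_t i = 0; i < n_procs; ++i)
        if (procs[i].call == CALLS_YAC_CINIT_COMM &&
            !procs[i].comm_from_yac_handshake)
            return false;
    return true;
}
```

In this model, mixing yac_cinit on one process with yac_cinit_comm on a communicator from another source is reported as inconsistent, which corresponds to the deadlock case mentioned above.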

Dummy initialisation

Like yac_cinit, the routine yac_cinit_dummy first calls the MPI handshake algorithm. However, it does not provide the group name "yac". Therefore, these processes are not included in the YAC communicator and cannot call any other YAC routines, except for yac_cfinalize. The call to yac_cfinalize is only required if MPI was not initialised before the call to yac_cinit_dummy.

This routine is useful for processes that want to exclude themselves from the coupling by YAC.

Dummy initialisation with YAC communicator

If a process is part of a YAC communicator (generated by the MPI handshake algorithm using the group name "yac"), it can still exclude itself from using YAC by calling yac_cinit_comm_dummy and providing this communicator.
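The four routines described in this section can be summarised as a decision table. The sketch below is plain C and does not call YAC. The enum `init_routine_t` and the functions `select_init_routine` and `init_routine_name` are hypothetical helpers introduced for illustration, and only the returned routine names come from the text above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical tags for the routines described above (not a YAC type). */
typedef enum {
    INIT_DEFAULT,    /* yac_cinit */
    INIT_COMM,       /* yac_cinit_comm */
    INIT_DUMMY,      /* yac_cinit_dummy */
    INIT_COMM_DUMMY  /* yac_cinit_comm_dummy */
} init_routine_t;

/* Picks a routine for one process.
 * uses_yac: the process takes part in the coupling by YAC.
 * has_comm: the process already holds a communicator to pass on
 *           (for the dummy case, the YAC communicator). */
init_routine_t select_init_routine(bool uses_yac, bool has_comm)
{
    if (uses_yac)
        return has_comm ? INIT_COMM : INIT_DEFAULT;
    return has_comm ? INIT_COMM_DUMMY : INIT_DUMMY;
}

/* Maps a tag to the name of the C routine. */
const char *init_routine_name(init_routine_t routine)
{
    switch (routine) {
    case INIT_DEFAULT:    return "yac_cinit";
    case INIT_COMM:       return "yac_cinit_comm";
    case INIT_DUMMY:      return "yac_cinit_dummy";
    case INIT_COMM_DUMMY: return "yac_cinit_comm_dummy";
    }
    assert(!"unknown init routine");
    return NULL;
}
```

A process that does not use YAC but is already part of the YAC communicator maps to yac_cinit_comm_dummy, while a process without such a communicator maps to yac_cinit_dummy.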

Usage example

Coupled run configuration

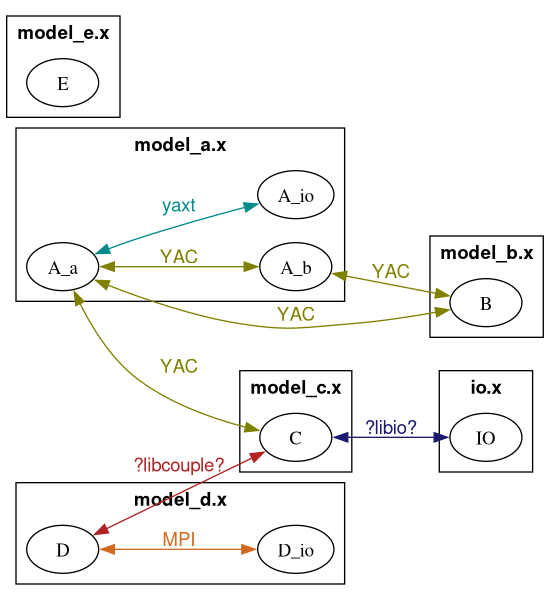

The following is an example for a coupled run configuration:

This configuration contains six different executables:

- model_a.x
  - each process is part of one component (A_a, A_b, or A_io)
  - A_a and A_b exchange data using YAC
  - A_a and A_io exchange data using the communication library yaxt
- model_b.x
  - all processes belong to component B
  - B and A_a exchange data using YAC
  - B and A_b exchange data using YAC
- model_c.x
  - all processes belong to component C
  - C and A_a exchange data using YAC
- model_d.x
  - each process is part of one component (D or D_io)
  - D and C exchange data using a Coupler-library
  - D and D_io communicate using MPI point-to-point communication
- model_e.x
  - all processes belong to component E
  - does not communicate with other components through MPI
- io.x
  - all processes belong to component IO
  - IO and C exchange data using an IO-library
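The configuration above can also be written as a small table in plain C, from which the handshake group names of each component follow. This is only a sketch: the type `component_t` and the functions `component_group_names` and `count_yac_components` are hypothetical, and the group names "libcouple" and "libio" are assumptions chosen for illustration (only "yac" is prescribed by YAC). The yaxt and MPI point-to-point links are left out, because they are internal to one executable.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical description of one component of the coupled run. */
typedef struct {
    const char *name;
    const char *executable;
    bool uses_yac;          /* exchanges data using YAC */
    bool uses_coupler_lib;  /* exchanges data using the Coupler-library */
    bool uses_io_lib;       /* exchanges data using the IO-library */
} component_t;

const component_t components[] = {
    {"A_a",  "model_a.x", true,  false, false},
    {"A_b",  "model_a.x", true,  false, false},
    {"A_io", "model_a.x", false, false, false},
    {"B",    "model_b.x", true,  false, false},
    {"C",    "model_c.x", true,  true,  true},
    {"D",    "model_d.x", false, true,  false},
    {"D_io", "model_d.x", false, false, false},
    {"E",    "model_e.x", false, false, false},
    {"IO",   "io.x",      false, false, true},
};

#define N_COMPONENTS (sizeof components / sizeof components[0])

/* Fills `names_out` with the group names a process of component `c`
 * would pass to the handshake and returns how many were written.
 * "libcouple" and "libio" are assumed names, not fixed by any library. */
size_t component_group_names(const component_t *c, const char *names_out[3])
{
    assert(c != NULL && names_out != NULL);

    size_t n = 0;
    if (c->uses_yac)         names_out[n++] = "yac";
    if (c->uses_coupler_lib) names_out[n++] = "libcouple";
    if (c->uses_io_lib)      names_out[n++] = "libio";
    return n;
}

/* Counts the components whose processes take part in the YAC coupling. */
size_t count_yac_components(void)
{
    size_t count = 0;
    for (size_t i = 0; i < N_COMPONENTS; ++i)
        if (components[i].uses_yac)
            ++count;
    return count;
}
```

In this table, component C is the only one that provides all three group names, and component E provides none, which matches the statement that it does not communicate with other components through MPI.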

Implementation

The generation of the MPI communicators and the initialisation of all libraries can be implemented as follows.

model_a.x

The components of this executable require the following communicators:

- a_comm
  - contains all processes of model_a.x
- yac_comm
  - contains all processes using YAC
- a_io_comm
  - contains all processes of components A_a and A_io
- a_a_comm, a_b_comm, io_comm
  - contains all processes of component A_a, A_b, or A_io respectively

model_b.x

The component of this executable requires the following communicator:

- b_comm
  - contains all processes of component B

(The communicator yac_comm is not required, because all communication with model_a.x is done through YAC.)

model_c.x

The component of this executable requires the following communicators:

- yac_comm
  - contains all processes using YAC
- libio_comm
  - contains all processes using libio
- libcouple_comm
  - contains all processes using libcouple
- c_comm
  - contains all processes of component C

model_d.x

The components of this executable require the following communicators:

- d_io_comm
  - contains all processes of model_d.x
- libcouple_comm
  - contains all processes using libcouple
- d_comm and io_comm
  - contains all processes of components D or D_io respectively

model_e.x

The component of this executable does not require any communicators.

io.x

The component of this executable requires the following communicator:

- libio_comm
  - contains all processes using libio

Alternatively, if libio supports the same handshake algorithm, no additional communicator has to be generated.